1: Text Data Processing

Introduction

In this first section of the NLP module, we will focus on how to process text data. If you are used to doing data science in other areas, many of the methods and names here may be unfamiliar, but don’t worry: we will explain each of them as we go. Once you have seen and understood the main components of a text data analysis pipeline, you will notice that almost every NLP problem involves roughly the same pre-processing steps.

Additional information

One of the important things that an aspiring data scientist should realise is that doing data science in the real world is much harder than in the classroom. Most of the available coursework is built around toy datasets that are often already processed, and around a business question that has already been validated and posed. Real-world projects rarely come this well prepared. Some lessons on what to expect in real-world NLP use cases are provided here.

Applied NLP: Lessons from the Field (20 minutes)

Essential NLP Libraries

Let’s get started by importing the required packages. We start with pandas, which is by far the most widespread library for data processing in Python. It works for a variety of data types, and text data is no exception.

import pandas as pd

The following settings control how dataframes are displayed in the interactive Jupyter output. By default, if a dataframe has many columns, printing its .head() truncates the ones in the middle and replaces them with ...; setting display.max_columns to None makes all columns visible. Separately, long text cells are cut off after 50 characters by default; display.max_colwidth raises this limit to 100 characters.

pd.set_option('display.max_columns', None)
pd.set_option('display.max_colwidth', 100)

Exercise

As an exercise, try to find what setting is going to help you display the cells in a dataframe without truncating the text.

The next library that we will be using is nltk, short for “Natural Language Toolkit”. It is one of the older libraries on our list, but it is still widely used for NLP tasks, is actively maintained, and has a plethora of powerful methods covering a wide range of topics, from general to domain-specific.

Blended learning

NLTK Book: read Chapters 1, 2 and 3 (3 hours)

Getting Started with NLTK Tutorial (1 hour)

import nltk

from nltk.corpus import stopwords

from nltk.stem import PorterStemmer, WordNetLemmatizer

from nltk.tag import DefaultTagger

from nltk.corpus import treebank

Note that there is one additional step you need to take after installing the nltk Python package: you have to download the corpora and models it uses. Start the interpreter (type python3 in your activated venv terminal) and run the following commands:

>>> import nltk

>>> nltk.download()

This should open up a GUI where you can configure which corpora you need. It is recommended that you download all of them just in case.

Exercise

Is there a way to do this from the command line directly (without going into the Python REPL)?

Another useful package that we will use is tqdm. This handy tool displays a progress bar while Python code iterates over data. This is very useful for compute-heavy text processing tasks, since it shows how far along the computation is and estimates how much time is remaining.

from tqdm import tqdm

In order to use it with pandas we have to register it:

tqdm.pandas()

Dataset Overview

In this project we will be creating a model which can classify Amazon product reviews. The data is obtained from here.

We will focus on the Appliances section. To download the data with the command line you can do the following:

wget http://deepyeti.ucsd.edu/jianmo/amazon/categoryFilesSmall/Appliances_5.json.gz

import gzip
import json

def parse(path):
    with gzip.open(path, 'rb') as g:
        for l in g:
            yield json.loads(l)

def getDF(path):
    i = 0
    df = {}
    for d in parse(path):
        df[i] = d
        i += 1
    return pd.DataFrame.from_dict(df, orient='index')

reviews_data = getDF('../data/Appliances_5.json.gz')

Exercise

Imagine you are the data scientist who wrote those functions. How would you explain what they do to a beginner colleague who barely knows Python? Your task is to enhance these functions by writing several helpful comments and documentation [1].
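Each line of the downloaded file is a separate JSON object (the JSON Lines format), and the whole file is gzip-compressed. To see what parse does without downloading anything, the following sketch writes two made-up records in a similar shape to a temporary file and reads them back; the file name and records are hypothetical. As an alternative, pandas should also be able to read such a file directly with pd.read_json(path, lines=True), inferring the gzip compression from the .gz extension, although that is not what the code above uses.

```python
import gzip
import json
import os
import tempfile

def parse(path):
    # Open the compressed file and yield one parsed JSON object per line
    with gzip.open(path, "rb") as f:
        for line in f:
            yield json.loads(line)

# Two hypothetical records shaped like the review data (illustrative only)
records = [
    {"overall": 5.0, "reviewText": "Works great."},
    {"overall": 3.0, "reviewText": "Good kit with some caveats."},
]

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "toy_reviews.json.gz")
    # Write one JSON object per line into a gzip-compressed text file
    with gzip.open(path, "wt", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")
    # Read the records back with the generator
    parsed = list(parse(path))

print(parsed == records)
```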

Let’s also see how many rows and columns we have:

reviews_data.shape

(2277, 12)

Let’s have a look at several random rows to get our bearings. For this data, sampling is more suitable than looking at the first rows, since the rows in the file may be grouped in some way, so the first ones are not necessarily representative of the rest. Another important argument here is random_state, which we can set to an arbitrary number. Fixing the seed is common practice in machine learning because it ensures reproducibility: every time you run the code, it returns the same rows [2].

reviews_data.sample(5, random_state=33)

| | overall | verified | reviewTime | reviewerID | asin | style | reviewerName | reviewText | summary | unixReviewTime | vote | image |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1439 | 5.0 | True | 11 9, 2016 | AMY6O4Z9HINO0 | B0006GVNOA | NaN | J. Foust | The lint eater is amazing! We recently bought a house that was built in 2001 and previously owne... | Excellent choice for cleaning out dryer vent line. Worth every penny! | 1478649600 | 9 | NaN |
| 213 | 5.0 | True | 12 6, 2016 | A2B2JVUX5YN8RU | B0006GVNOA | NaN | Caleb | Works great. I used it and an extension kit with extra rods to to clean a 21 foot long vent with... | Worked like a charm | 1480982400 | 6 | [https://images-na.ssl-images-amazon.com/images/I/71rrhRfUnpL._SY88.jpg, https://images-na.ssl-i... |
| 1355 | 5.0 | True | 02 25, 2017 | A8WEXFRWX1ZHH | B0006GVNOA | NaN | Goldengate | I bought this last October and finally got around to cleaning our 15-foot dryer duct run. It's j... | A great device that has my dryer working well again. Simple instructions here. | 1487980800 | 311 | NaN |
| 1402 | 3.0 | True | 12 6, 2016 | A25C30G90PKSQA | B0006GVNOA | NaN | CP | first thing first: it works. the kit is great in what it consists of and the price is just right... | good kit with some caveats | 1480982400 | 6 | NaN |
| 1321 | 4.0 | True | 01 15, 2018 | A1WD61B0C3KQZB | B0006GVNOA | NaN | Jason W. | Great product but they need to include more rods in the kit. I had to buy a second set of rods a... | Works Great, Just Use Common Sense When Doing It!!! | 1515974400 | 4 | [https://images-na.ssl-images-amazon.com/images/I/71FdVLhvIUL._SY88.jpg, https://images-na.ssl-i... |
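The effect of random_state can be checked on a small example. The sketch below uses a hypothetical toy dataframe in place of reviews_data: two calls with the same seed return identical rows, while a call without a seed may return different rows every time it runs.

```python
import pandas as pd

# Hypothetical toy frame standing in for reviews_data
df = pd.DataFrame({
    "overall": [5.0, 3.0, 4.0, 5.0, 2.0, 5.0],
    "summary": ["a", "b", "c", "d", "e", "f"],
})

first = df.sample(3, random_state=33)
second = df.sample(3, random_state=33)
unseeded = df.sample(3)  # no seed: the selected rows may change between runs

print(first.equals(second))
```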

Exercise: Working with missing data

Data in the real world is often incomplete, with portions of it missing. There are different ways to deal with this issue (including omitting data or imputing it from other data points), but as a first step we need to know exactly how much and what data is missing.

Your task is to find and apply two methods for detecting missing data:

A numeric summary of missing data

A graphical representation of missingness

In machine learning it is a common convention to call the column we want to predict the label (or target); in our dataset this is the overall star rating.

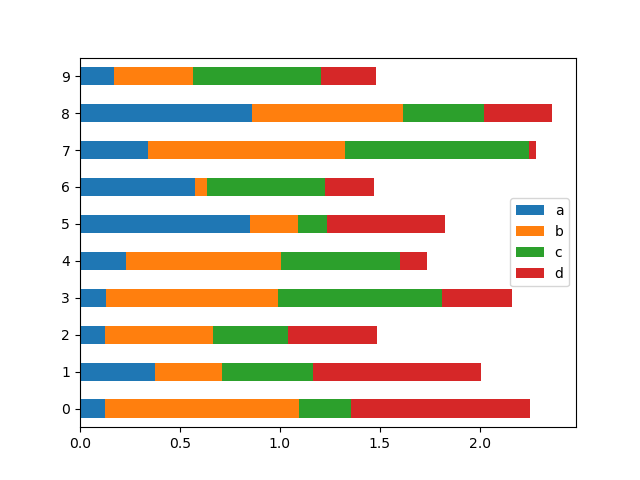

Our next step is to look at the distribution of labels (“targets”) in our dataset. A common problem in machine learning is an imbalanced dataset, in which some classes are much rarer than others; a model trained on such data tends to favour the majority class. Counting how often each label occurs is called a “frequency count”, and in pandas it is done with the value_counts() method.

reviews_data["overall"].value_counts()

5.0 1612

3.0 421

4.0 222

2.0 13

1.0 9

Name: overall, dtype: int64
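To quantify the imbalance, it helps to turn the counts into shares. pandas can do this directly with value_counts(normalize=True); the sketch below does the same arithmetic in plain Python, using the counts printed above.

```python
# Counts copied from the value_counts() output above
label_counts = {5.0: 1612, 3.0: 421, 4.0: 222, 2.0: 13, 1.0: 9}

total = sum(label_counts.values())  # 2277, the number of rows in reviews_data
shares = {label: count / total for label, count in label_counts.items()}

for label, share in shares.items():
    print(f"{label}: {share:.1%}")
```

Roughly 71% of the reviews are 5-star, so a model that always predicted 5.0 would already be right about 71% of the time. This is why accuracy on its own can be misleading for this dataset.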

Additional information: Pandas visualisations

As part of the previous exercise you may have used the built-in pandas visualisation capabilities. There are many others that can be useful, and you can check them out in the official User Guide here (30 minutes).

And finally, let’s select a sample review that we will use to develop the following text processing steps. It is important to pick a fairly typical sample rather than an outlier (e.g. an empty message), since an outlier would make development difficult and the resulting code would not generalize well to the other reviews in the dataset.

sample_text = reviews_data["reviewText"][333]

sample_text

'Works great. I used it and an extension kit with extra rods to to clean a 21 foot long vent with a two right angle turns and it did a fantastic job with the adapter that lets you put the rod through and attach a shop vac. Got a TON of lint out, about a 5 gallon bucket full. Inside of the vent was left shiny and clean. Good for safety especially with such a long vent and to my surprise the dryer actually works quite a bit better now. On the auto cycle I used to have to run it again if I was drying heavy things like jeans or blankets/comforters etc... to fully dry things. Now it does it in one cycle and take about 25% less time to go through the auto dry cycle.\n\nI was just wanting to clean out a potential fire hazard but it really does make a big difference running through a dirty vent vs. a clean one. Might be due to the long length of my vent but very happy with this product.'

Tokenization

A standard procedure when dealing with text data is to “tokenize” it. This means converting the raw input string into smaller units that are easier to work with (e.g. to loop over) in Python. These units are called “tokens”, and a token can be a sentence or a word, since text is organized on at least those two levels (there are higher ones as well, such as paragraphs).

nltk provides two handy methods that can tokenize a paragraph into sentences, and sentences into words. Let’s try them out:

sentences = nltk.sent_tokenize(sample_text)

sentences

['Works great.',

'I used it and an extension kit with extra rods to to clean a 21 foot long vent with a two right angle turns and it did a fantastic job with the adapter that lets you put the rod through and attach a shop vac.',

'Got a TON of lint out, about a 5 gallon bucket full.',

'Inside of the vent was left shiny and clean.',

'Good for safety especially with such a long vent and to my surprise the dryer actually works quite a bit better now.',

'On the auto cycle I used to have to run it again if I was drying heavy things like jeans or blankets/comforters etc... to fully dry things.',

'Now it does it in one cycle and take about 25% less time to go through the auto dry cycle.',

'I was just wanting to clean out a potential fire hazard but it really does make a big difference running through a dirty vent vs. a clean one.',

'Might be due to the long length of my vent but very happy with this product.']
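Why not simply split on full stops? A naive rule-based splitter, sketched below, breaks the second-to-last sentence of our sample after the abbreviation “vs.”, whereas nltk.sent_tokenize kept it whole. nltk’s default sentence tokenizer (Punkt) relies on a pre-trained model that, among other things, has learned common abbreviations. The function name naive_sentence_split is hypothetical, for illustration only.

```python
import re

def naive_sentence_split(text):
    # Split after '.', '!' or '?' whenever whitespace follows: a simplified rule
    return re.split(r"(?<=[.!?])\s+", text.strip())

text = ("I was just wanting to clean out a potential fire hazard but it really does make "
        "a big difference running through a dirty vent vs. a clean one.")

pieces = naive_sentence_split(text)
print(pieces)
```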

words = nltk.word_tokenize(sentences[6])

words

['Now',

'it',

'does',

'it',

'in',

'one',

'cycle',

'and',

'take',

'about',

'25',

'%',

'less',

'time',

'to',

'go',

'through',

'the',

'auto',

'dry',

'cycle',

'.']
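Similarly, word tokenization is more than splitting on whitespace. The sketch below contrasts str.split() with a simplified regular-expression tokenizer; regex_tokenize is a hypothetical helper, not the algorithm behind nltk.word_tokenize, which follows the Penn Treebank conventions.

```python
import re

sentence = "Now it does it in one cycle and take about 25% less time to go through the auto dry cycle."

# Naive whitespace split: punctuation stays glued to the neighbouring word
print(sentence.split()[-3:])

def regex_tokenize(text):
    # A token is either a run of word characters or a single non-space symbol
    return re.findall(r"\w+|[^\w\s]", text)

tokens = regex_tokenize(sentence)
print(tokens)
```

On this sentence the regex happens to produce the same 22 tokens as nltk.word_tokenize above, but the two differ on contractions: the regex turns “It's” into It, ' and s, while nltk produces It and 's.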

Exercise

A common source of data in NLP is the social media giant Twitter. Since tweets are quite different in format from standard text (e.g. they often use non-standard abbreviations and emoji), there are specialised ways to process them.

Find if there are methods in nltk and other libraries that can process tweet data better than the default ones.

Additional information: Visualising text data

Since we are on the topic of data visualisation: most visualisation resources focus on tabular data, but there are quite a few creative ways to visualise text as well. Have a look at the Text Visualization Browser for inspiration.

We can see that the tokenization worked. The full stop . is also treated as a token, but we will deal with that later.

Stop Word Removal

An essential component of text data processing is the removal of tokens that carry little information. This should be done with caution, since what counts as informative depends on the application and is often best decided together with a domain expert. Take Twitter data as an example. For some applications, tokens such as RT (retweet) can be seen as junk and removed, but if you are building a network graph of who retweets whom, they could be the most important part of the data, so you should keep them.

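Still, for most applications there is one type of token that is very commonly removed: stop words, the most frequent function words of a language (such as “the”, “and” or “of”). Let’s have a look at what those words are in English and Arabic to get a clearer understanding. Keep in mind that the English list also contains negations such as “not” and “don't”, which can matter for tasks like sentiment analysis.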

english_stop_words = set(stopwords.words("english"))

arabic_stop_words = set(stopwords.words("arabic"))

print(english_stop_words)

print(arabic_stop_words)

{'only', "isn't", 'didn', 'of', 'your', 'than', 'now', 'at', 'so', "didn't", 'ours', 'he', 'did', 'they', "mightn't", 'most', 'no', 'herself', 'll', 'because', 'can', 'yours', "shan't", 'our', 'which', 'won', 'above', 'don', "should've", "you're", 'during', 'why', 'while', 'by', 'both', 'below', 'on', 'wouldn', 'ma', 'until', 'after', 'nor', 'them', 'were', 'm', 'am', 'you', 'ain', 'be', 'there', 'not', 'or', 'whom', 'wasn', 'had', 'i', "wasn't", 'under', "doesn't", 'a', 'himself', "that'll", 'couldn', 'hadn', 'yourself', 'we', "aren't", 'up', "won't", 'o', 'is', 'ourselves', 'should', 'was', "wouldn't", 'into', 'itself', 'weren', "you've", 'where', 'themselves', 'with', 'are', 'she', 'do', "it's", "weren't", 'an', "hadn't", 's', 'these', 'their', 'few', 'shouldn', "haven't", 'doing', 'her', 'my', "needn't", 'each', 'been', 'have', 'needn', 'myself', 'through', 'isn', 'and', "don't", "hasn't", 'for', 'shan', 'it', 'does', 'him', 'over', 'hasn', 'who', 'own', "you'll", 'that', 'will', 'theirs', 'its', 'between', 'to', "mustn't", 'against', 'the', 'what', 'such', 'very', 'mustn', 'yourselves', 'again', 'all', 'this', 'about', 'being', 'y', 've', "you'd", 're', 'hers', 'those', "she's", 'out', 'then', 'once', "couldn't", 'has', 'here', 'same', 'but', 'how', 'too', 'aren', "shouldn't", 'd', 'when', 'haven', 'as', 'further', 'any', 'other', 'down', 'in', 'me', 'his', 'if', 'off', 'from', 't', 'just', 'doesn', 'more', 'some', 'before', 'having', 'mightn'}

{'عند', 'ليسوا', 'مه', 'آه', 'إليكن', 'لو', 'لكي', 'بكما', 'بعد', 'عليه', 'هاتين', 'بكم', 'كيفما', 'نعم', 'فإن', 'إلى', 'ألا', 'ذات', 'كي', 'منه', 'خلا', 'أولاء', 'اللواتي', 'كأن', 'إليكم', 'لكما', 'عل', 'كأي', 'وإذ', 'لستن', 'ذلكن', 'ثم', 'ذلكما', 'ريث', 'نحن', 'وإذا', 'تلك', 'ذوا', 'كذلك', 'ليت', 'كيت', 'إنا', 'بس', 'هكذا', 'لستما', 'ليستا', 'كما', 'لكم', 'لي', 'التي', 'اللائي', 'اللتيا', 'إنما', 'حيثما', 'هاته', 'أين', 'اللتين', 'إليكما', 'ذي', 'كليهما', 'تلكم', 'إما', 'لست', 'آها', 'بيد', 'الذي', 'أن', 'ذانك', 'بخ', 'قد', 'ما', 'ذلك', 'هذين', 'وإن', 'عن', 'إلا', 'اللذين', 'لم', 'مع', 'والذين', 'كأنما', 'كلتا', 'تين', 'منذ', 'أقل', 'اللتان', 'إذ', 'بل', 'بكن', 'عدا', 'هاتان', 'إنه', 'هاهنا', 'بمن', 'أما', 'لما', 'لئن', 'أيها', 'كل', 'منها', 'لك', 'اللاتي', 'هاك', 'هذه', 'هيت', 'إي', 'إيه', 'عليك', 'أنت', 'متى', 'ومن', 'إذا', 'أوه', 'أف', 'أم', 'بهن', 'كم', 'آي', 'حيث', 'أنتن', 'إذما', 'ذينك', 'هلا', 'هنالك', 'كيف', 'لكيلا', 'بك', 'عسى', 'لهما', 'ذواتا', 'حاشا', 'لن', 'ذلكم', 'أنتم', 'أو', 'كليكما', 'هو', 'أنا', 'والذي', 'على', 'أي', 'كذا', 'تينك', 'كأين', 'بين', 'بلى', 'لدى', 'لسن', 'لهن', 'ذان', 'ولو', 'من', 'أنتما', 'شتان', 'إن', 'هل', 'أولئك', 'به', 'ليس', 'تلكما', 'كلما', 'ذين', 'لهم', 'هناك', 'فلا', 'وهو', 'مهما', 'بنا', 'كلاهما', 'هما', 'ها', 'لا', 'فيم', 'أكثر', 'في', 'هيا', 'فيما', 'فإذا', 'ماذا', 'ممن', 'هي', 'هؤلاء', 'لولا', 'ليسا', 'هاتي', 'هذان', 'أينما', 'لكنما', 'غير', 'لاسيما', 'حين', 'هن', 'كلا', 'هيهات', 'هنا', 'ته', 'ذا', 'بهما', 'دون', 'ذو', 'سوى', 'لعل', 'مما', 'بما', 'إليك', 'هذي', 'هم', 'اللذان', 'سوف', 'فيها', 'وما', 'لها', 'هذا', 'بماذا', 'تي', 'فمن', 'بي', 'ولكن', 'لسنا', 'ليست', 'عما', 'بها', 'أنى', 'ثمة', 'إذن', 'الذين', 'بعض', 'ولا', 'لوما', 'نحو', 'لستم', 'حبذا', 'له', 'لنا', 'ذاك', 'ذه', 'حتى', 'ذواتي', 'لكن', 'مذ', 'فيه', 'بهم', 'يا'}

Now let’s use those lists to remove stop words from our sample sentence. Here you can already see why we needed to tokenize the data first: the stop word list is compared against individual tokens.

cleaned_words = [word for word in words if word not in english_stop_words]

cleaned_words

['Now',

'one',

'cycle',

'take',

'25',

'%',

'less',

'time',

'go',

'auto',

'dry',

'cycle',

'.']
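Notice that “Now” survived in the output above even though “now” is in the stop word list: the list is entirely lowercase and the membership test is case-sensitive. A minimal sketch of the fix, using a small excerpt of the list, is to lowercase each token before comparing it.

```python
# A small excerpt of nltk's English stop word list (all entries are lowercase)
stop_words = {"now", "it", "does", "in", "and", "about", "to", "through", "the"}

words = ["Now", "it", "does", "it", "in", "one", "cycle"]

# Comparing the raw tokens misses "Now", because only "now" is in the list
case_sensitive = [w for w in words if w not in stop_words]

# Lowercasing before the membership test removes it as intended
case_insensitive = [w.lower() for w in words if w.lower() not in stop_words]

print(case_sensitive)
print(case_insensitive)
```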

Stemming and Lemmatization

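The following two methods go even further in removing unnecessary variation from the text data. Both reduce each word to a base form, so that different inflections of the same word (e.g. “cycle” and “cycles”) are mapped to one token. The difference is that stemming simply strips affixes using heuristic rules and does not check whether the result is a valid word (note “cycl” and “dri” in the output below), whereas lemmatization looks words up in a dictionary (WordNet, in the case of nltk’s WordNetLemmatizer) and returns a valid dictionary form, the lemma. Be aware that WordNetLemmatizer treats every word as a noun unless you pass a part-of-speech argument, which is why “less” becomes “le” below. Let’s try them out: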

stemmer = PorterStemmer()

stemmed_words = [stemmer.stem(word) for word in cleaned_words]

stemmed_words

['now',

'one',

'cycl',

'take',

'25',

'%',

'less',

'time',

'go',

'auto',

'dri',

'cycl',

'.']

lemmatizer = WordNetLemmatizer()

lemmatized_words = [lemmatizer.lemmatize(word) for word in cleaned_words]

lemmatized_words

['Now',

'one',

'cycle',

'take',

'25',

'%',

'le',

'time',

'go',

'auto',

'dry',

'cycle',

'.']

# keep only purely alphabetic tokens: this drops punctuation, but also numbers such as '25'

final_processed_words = [word for word in lemmatized_words if word.isalpha()]

final_processed_words

['Now', 'one', 'cycle', 'take', 'le', 'time', 'go', 'auto', 'dry', 'cycle']
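To make the difference between the two approaches concrete, here is a deliberately simplified, hypothetical pair of functions: toy_stem strips a few common suffixes with fixed rules (the real Porter algorithm applies several ordered rule steps with extra conditions), and toy_lemmatize looks words up in a tiny hand-written dictionary standing in for WordNet. Neither is what nltk does internally.

```python
# Hypothetical suffix rules, checked in order
SUFFIXES = ("ing", "ed", "es", "s")

def toy_stem(word):
    """Strip the first matching suffix; the result need not be a real word."""
    for suffix in SUFFIXES:
        # Only strip if at least three characters remain
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

# Hypothetical mini-dictionary standing in for WordNet
TOY_LEMMAS = {"cycles": "cycle", "drying": "dry", "dried": "dry", "bought": "buy"}

def toy_lemmatize(word):
    """Look the word up; fall back to the word itself if it is unknown."""
    return TOY_LEMMAS.get(word, word)

for w in ["cycles", "drying", "dried", "bought"]:
    print(w, toy_stem(w), toy_lemmatize(w))
```

The rule-based stemmer produces non-words such as “cycl” and “dri” and cannot handle the irregular form “bought”, while the dictionary lookup maps all four to valid words, but only because they are in its dictionary.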

Blended learning

Read about the classic Porter stemming algorithm, on which nltk’s PorterStemmer is based (1 hour).

Blended learning

Go through the stemming and lemmatization tutorial from Stanford NLP (45 minutes).

Part of Speech (PoS) Tagging

This method can be used for text processing too, but it is much more powerful than the ones we have covered so far; its output can even be the end result of an NLP project on its own. Part of speech tagging assigns each word in the data to a grammatical category, such as noun, verb or adjective. Those categories can then be used, for example, to further trim and clean the data. But you could also go a step further and, say, extract product names from a dataset, and even use that information to build a separate classifier.

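Proper PoS tagging is more advanced than the other methods, and relies on statistical models trained on large corpora of manually tagged text. Such pre-trained models are available both in nltk (for example through nltk.pos_tag) and in spaCy (which we will have a look at later). As a first step, let’s try nltk’s DefaultTagger. It is not a trained model: it simply assigns the same tag, here NN (singular noun), to every token, which makes it a useful baseline or a fallback for words a trained tagger has never seen.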

tagger = DefaultTagger("NN")

tagger.tag(words)

[('Now', 'NN'),

('it', 'NN'),

('does', 'NN'),

('it', 'NN'),

('in', 'NN'),

('one', 'NN'),

('cycle', 'NN'),

('and', 'NN'),

('take', 'NN'),

('about', 'NN'),

('25', 'NN'),

('%', 'NN'),

('less', 'NN'),

('time', 'NN'),

('to', 'NN'),

('go', 'NN'),

('through', 'NN'),

('the', 'NN'),

('auto', 'NN'),

('dry', 'NN'),

('cycle', 'NN'),

('.', 'NN')]
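A trained tagger improves on the DefaultTagger by learning tags from annotated data. The sketch below shows the idea behind the simplest such tagger, a unigram tagger: remember the most frequent tag for each word in a tagged corpus and fall back to a default tag for unknown words. The tiny corpus and the helper names are hypothetical; in nltk, the equivalent should be something along the lines of UnigramTagger(treebank.tagged_sents(), backoff=DefaultTagger("NN")), using the treebank corpus imported earlier.

```python
from collections import Counter, defaultdict

def train_unigram_tagger(tagged_sentences):
    """Learn the most frequent tag for each (lowercased) word."""
    counts = defaultdict(Counter)
    for sentence in tagged_sentences:
        for word, tag in sentence:
            counts[word.lower()][tag] += 1
    return {word: tag_counts.most_common(1)[0][0] for word, tag_counts in counts.items()}

def tag(words, model, default="NN"):
    """Use the learned tag for known words; back off to a default tag otherwise."""
    return [(w, model.get(w.lower(), default)) for w in words]

# Tiny hand-tagged toy corpus using Penn Treebank tag names (illustrative only)
toy_corpus = [
    [("It", "PRP"), ("works", "VBZ"), ("great", "JJ"), (".", ".")],
    [("The", "DT"), ("dryer", "NN"), ("works", "VBZ"), ("now", "RB"), (".", ".")],
]

model = train_unigram_tagger(toy_corpus)
tagged = tag(["Now", "the", "dryer", "works", "fine", "."], model)
print(tagged)
```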

Bringing it all together

Now that we have covered the most important parts of a text processing pipeline, it is useful to create a so-called “master function” that combines all of them. What we have written so far works on just one review sentence; wrapping the steps in a function helps us organize our code better and scale it to the whole dataset. Moreover, if we were to deploy the processing step as part of an API, this would also be necessary (you can learn more about this in the Data Engineering module).

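def process_text(text_input):
    """
    """
    # we skip the sentence split, since for this example we don't need that information
    words = nltk.word_tokenize(text_input)
    # note: stop words are checked before lowercasing, so capitalised ones such as "The" or "I" are kept
    words = [word.lower() for word in words if word not in english_stop_words]
    words = [stemmer.stem(word) for word in words]
    # lemmatizing words that have already been stemmed usually has little effect
    words = [lemmatizer.lemmatize(word) for word in words]
    # keep only purely alphabetic tokens (drops punctuation and numbers)
    words = [word for word in words if word.isalpha()]
    return ' '.join(words)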

Exercise

Replicate the complete NLTK pipeline in spaCy, by creating a master function (2 hours).

Exercise

Create a function documentation with a docstring for the master process_text.

Now let’s see this function in action, and here we also have a perfect opportunity to use the tqdm progress bar indicator.

reviews_data["text_processed"] = reviews_data["reviewText"].progress_apply(process_text)

100%|██████████| 2277/2277 [00:14<00:00, 156.31it/s]

Let’s check whether this worked:

reviews_data[["reviewText", "text_processed"]].sample(5)

| | reviewText | text_processed |
|---|---|---|
| 1308 | This review is for Gardus RLE202 LintEater 10-Piece Rotary Dryer Vent Cleaning System\n\nWeve be... | thi review gardu linteat rotari dryer vent clean system weve pay per visit servic guy applianc s... |
| 686 | We have 24 foot of solid dryer vent pipe ending in 3 right-angles before going through the floor... | we foot solid dryer vent pipe end go floor flexibl hose come dryer i ca get behind dryer without... |
| 1883 | Works great. I used it and an extension kit with extra rods to to clean a 21 foot long vent with... | work great i use extens kit extra rod clean foot long vent two right angl turn fantast job adapt... |
| 51 | Moved into new apt, needed dryer vent hose, this fit the bill. The compression clamps made insta... | move new apt need dryer vent hose fit bill the compress clamp made instal snap |
| 845 | I bought this last October and finally got around to cleaning our 15-foot dryer duct run. It's j... | i bought last octob final got around clean dryer duct run it type thing top list like wow i go s... |

Now let’s store this data, since we will be using it in the later parts of the module.

reviews_data.to_csv("../data/reviews_data.csv", index=False)

Advanced Text Processing with spaCy

So far, all of the methods we have been using were part of the nltk library. They help us achieve most of the tasks, but if we started using them on larger datasets, in production or for more advanced purposes, they would start to show their weaknesses, both in terms of functionality and performance.

This gap in the open-source NLP ecosystem was filled by the spaCy package, which has since grown into an extremely well-maintained library used for large-scale, advanced and diverse NLP projects. This is why it makes sense to get familiar with it as well. Let’s have a look!

import spacy

from spacy import displacy

Similarly to the corpora and pre-trained models that nltk needs, spaCy also requires some additional data besides the Python code. You only need to download it once when setting up your NLP environment. For our purposes we need the English language model; again, run this from your activated venv:

python -m spacy download en_core_web_sm

After this, the following cell should work without throwing an error:

nlp = spacy.load("en_core_web_sm")

The fundamental building block of a spaCy pipeline is the Doc object. Let’s use a sample review to create one:

sample_text = reviews_data["reviewText"][525]

doc = nlp(sample_text)

doc

I bought this last October and finally got around to cleaning our 15-foot dryer duct run. It's just not the type of thing that's at the top of your list... like "Wow, should I go see a movie, or clean our dryer duct?" However, our dryer was drying slower and slower, and I knew it was a task that I needed to tackle, so I read the very long instruction book for this and began my work. The instructions really need to be re-written and simplified. My Sony TV has a smaller instruction manual. But I do appreciate the manufacturer's thoroughness. For those of you with short attention spans here's my quick start guide:

1. Screw the rods together and use electrical or duct tape to reinforce the joints to keep them from unscrewing during your work.

2. Stick one end of rod in your drill and set the clutch pretty close to the lowest or second lowest setting, then increase power if you need it.

3. Attached brush to other end of rod with supplied key wrench / allen wrench and tighten.

4. Make sure your drill is on clockwise - not reverse - mode or else the rods will unscrew inside your duct and you're screwed.

5. Read #4 again.

6. Turn dryer on air mode and then insert the brush in the pipe outside and begin spinning it by activating the drill. Push it in about a foot, then pull it out and watch the lint fly. Then push it in 2 feet and pull it out and watch the lint fly. Then 3 feet... etc.

7. Marvel at how much lint came out.

8. Marvel at how fast your dryer is and come write a review here.

9. Wash the clothes you were wearing when you did this because they are now covered in lint.

Hope this helps. It's a quick job and well worth it the trouble!

We can then use this object to extract further information, for example the verbs (using PoS tagging):

print("Verbs:", [token.lemma_ for token in doc if token.pos_ == "VERB"])

Verbs: ['buy', 'get', 'clean', 'run', 'should', 'go', 'see', 'clean', 'dry', 'know', 'need', 'tackle', 'read', 'begin', 'need', 're', '-', 'write', 'appreciate', 'screw', 'use', 'reinforce', 'keep', 'unscrew', 'set', 'increase', 'need', 'attach', 'supply', 'tighten', 'make', 'will', 'screw', 'read', 'turn', 'insert', 'begin', 'spin', 'activate', 'push', 'pull', 'watch', 'fly', 'push', 'pull', 'watch', 'fly', 'marvel', 'come', 'marvel', 'come', 'write', 'wash', 'wear', 'cover', 'hope', 'help']

Note that we took each token’s lemma directly, since spaCy computes lemmas as part of its pipeline. Or maybe we are looking for more interesting entities?

for entity in doc.ents:

print(entity.text, entity.label_)

this last October DATE

15-foot QUANTITY

Sony ORG

1 CARDINAL

2 CARDINAL

one CARDINAL

second ORDINAL

3 CARDINAL

key wrench / allen wrench PERSON

4 CARDINAL

5 CARDINAL

4 MONEY

6 CARDINAL

2 feet QUANTITY

3 feet QUANTITY

7 CARDINAL

8 CARDINAL

9 CARDINAL

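Note that the small English model is not perfect: it labels “key wrench / allen wrench” as a PERSON and the “4” in “Read #4 again” as MONEY. We can of course also easily tokenize the data: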

for token in doc[0:20]:

print(token.text)

I

bought

this

last

October

and

finally

got

around

to

cleaning

our

15-foot

dryer

duct

run

.

It

's

just

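What makes spaCy even better is its built-in support for rich, interactive visualisations. Let’s visualise the dependencies in the first sentence of our sample review (rendering the whole review at once produces a very large image):

first_sentence = next(doc.sents)
displacy.render(first_sentence, jupyter=True, style="dep")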

Another thing we can do is visually annotate the entities in the text:

displacy.render(doc, jupyter=True, style="ent")

There is a multitude of additional packages available for spaCy, from different contributors, which are not part of the main package. If you have some more custom needs, it makes sense to have a look there before reinventing the wheel. The directory listing of those packages is available here: https://spacy.io/universe.

Fig. 2 spaCy universe

Let’s have a look at how to install a tool from the spaCy Universe and use it. One task that is often encountered in NLP is language detection. Let’s say we are trying to use a pre-trained sentiment model to predict the sentiment in a dataset. While reading the documentation, we see that this model only works on English and would fail on other languages. In order to deliver value, we need to detect the language of each text and then use that to subset the data.

There is a package in spaCy’s Universe that we can use for this, called spacy-langdetect. More information on it is available here.

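from spacy.language import Language
from spacy_langdetect import LanguageDetector

# spaCy v3 only accepts registered component names in add_pipe, so we register a factory first;
# with spaCy v2, nlp.add_pipe(LanguageDetector(), name='language_detector', last=True) works directly
@Language.factory("language_detector")
def create_language_detector(nlp, name):
    return LanguageDetector()

nlp.add_pipe("language_detector", last=True)

text = 'This is an english text.'
text_de = 'Und das ist ein Text auf Deutsch.'

doc = nlp(text)
# document level language detection. Think of it like average language of the document!
print(doc._.language)
# sentence level language detection
for sent in doc.sents:
    print(sent, sent._.language)

doc = nlp(text_de)
# document level language detection. Think of it like average language of the document!
print(doc._.language)
# sentence level language detection
for sent in doc.sents:
    print(sent, sent._.language)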

{'language': 'en', 'score': 0.9999983530132899}

This is an english text. {'language': 'en', 'score': 0.9999967417718051}

{'language': 'de', 'score': 0.9999994746586149}

Und das ist ein Text auf Deutsch. {'language': 'de', 'score': 0.9999965013001413}

We can see that it worked perfectly!
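To see the idea behind language-based subsetting without any extra packages, here is a crude, hypothetical heuristic: count how many words of a text appear in small per-language stop word lists and pick the language with the most matches. This is not how langdetect works (it compares character n-gram profiles), and the tiny word lists are assumptions for illustration only.

```python
# Hypothetical, deliberately tiny stop word lists per language
STOP_WORDS = {
    "en": {"the", "is", "and", "this", "an", "of", "to", "it"},
    "de": {"der", "die", "das", "und", "ist", "ein", "auf", "nicht"},
}

def guess_language(text):
    """Return the language whose stop words overlap most with the text, or None."""
    tokens = {t.strip(".,!?").lower() for t in text.split()}
    scores = {lang: len(tokens & words) for lang, words in STOP_WORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None

reviews = ["This is an english text.", "Und das ist ein Text auf Deutsch.", "12345"]

# Keep only the texts detected as English, e.g. before applying an English-only model
english_only = [r for r in reviews if guess_language(r) == "en"]
print(english_only)
```

Texts with no matches at all, such as “12345”, get None, so the English-only filter drops them as well.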

Exercise

Explore what useful NLP resources are out there for Arabic texts.

Portfolio Projects

Portfolio Project: Swiss Army Knife for NLP

One of the most useful things you can do in your career as a data scientist is to build a vault of code snippets, materials, books and everything else: a personal knowledge base. Every time you solve a hard challenge with data, make sure to keep a note on how you did it; it will probably not be the last time you encounter the issue in your career.

The same goes for very repetitive tasks. After all, automation is one of our main goals, so why not apply it to our own toolkits? One procedure that you have now learned is text processing, and the steps in the master function will be very similar from one NLP project to the next. The goal of this portfolio project is to create a Python package containing your code so you can reuse it between projects. The package should contain not only the function, but also a few parameters and flags that make it more flexible for general use.

Making a Python package is not easy, but there are good resources out there, for example the Python Packaging User Guide.

Portfolio project: Bilingual data processing

One of the bigger discussions in the data science field today is which programming language is best for data science. The two major contenders are R and Python. Our coursework covers both, which is why the second possible portfolio project is to create a text processing function in R. You are also free to use other open-source tools, such as stringr.

Portfolio project: Security Council Data

The next dataset is very interesting for a variety of natural language applications, such as topic modeling, sentiment analysis and classification, and is ideal to demonstrate the challenges of working with text data in the real world. Thus, in order to be able to do more advanced data science workflows we need to process the data first.

Download and process the Security Council Transcriptions dataset (2-3 hours). Note: you will need to load it into R first and export to a proper format.

Glossary

- NLP

Natural Language Processing

- REPL

Read–eval–print Loop

Footnotes

- 1

Documentation is often not paid enough attention to in technical work, but it is an essential component of a successful project. You can read more about documenting Python code here.

- 2

Setting the random seed is a more complicated topic than you might think. Have a look at this post for more information.